Haptic Glove with Haptic Feedback for Elastic Objects at a Low Cost

Abstract

As the need for haptic technology in the metaverse has grown, a number of glove-shaped haptic devices have been developed and commercialized. However, current commercial devices are too expensive not only for developing countries but also for average individuals. This paper therefore presents an affordable and practically usable haptic glove. The paper addresses three main elements. The first is the Leap Motion Controller (LMC), which is used to improve and stabilize hand-tracking recognition in Unity. The second is elasticity: using string-based haptic feedback, our Haptic Glove reproduces the elastic effect of a virtual object. The third is an EMG sensor: by connecting the EMG sensor to the Haptic Glove, this paper explores the possibility of extending the use of the Haptic Glove to fine motor development and rehabilitation.

Introduction

This paper aims to improve the user experience of the metaverse at a low cost by providing an affordable Haptic Glove with which users can feel kinesthetic feedback, including the elasticity of virtual objects. Most current metaverse content lacks immersion because haptic feedback is absent. An ideal metaverse experience becomes possible when visual, audio, and haptic technologies are combined. Many recent developments in visual and audio technology have already reached the public. The haptic industry has also grown significantly, and a number of haptic devices have been made for research and commercial use. Despite this, haptic devices remain too expensive for developing countries and individuals (Kwon, 2018).

The CyberGrasp is one example of a high-end Haptic Glove. It uses a cable-driven mechanism to reduce the volume and weight of the product (Ma and Ben-Tzvi, 2015). One actuator is attached to each finger, so the glove uses five actuators in total. Through an exoskeleton structure, it produces a continuous resistance force of up to 12 N on each fingertip, and it weighs 450 g.

TESLAGLOVE from Teslasuit (2023) provides tactile and kinesthetic haptic feedback. TESLAGLOVE also uses an exoskeleton structure to provide a resistance force of up to 9 N and a torque of 2.8-3.3 kg·cm on the fingers. For hand tracking, it requires external controllers such as HTC Vive Trackers or Meta Quest 2 Controllers.

DEXMO Development Kit 1 (Dexmo) from Dexta Robotics provides kinesthetic haptic feedback with a high torque of 5 kg·cm. Since a person can exert about 7 kg·cm of torque on average, a torque of 5 kg·cm is not dangerous. It is lightweight at 300 g (Dexta Robotics, 2017).

These haptic gloves provide high-quality haptic feedback. However, their current prices remain an obstacle for developing countries and hinder individual users' ability to experience the metaverse with them. In other words, a low-cost haptic glove is necessary for people who want to research or experience a more immersive metaverse on a limited budget.

There has also been research on a low-cost haptic glove. That glove uses a cable-driven mechanism with strings and servo motors for kinesthetic haptic feedback. For tactile haptic feedback, the authors used an LRA motor, and for hand tracking they used flex sensors, MPU-6050 IMU sensors, and Google's open-source MediaPipe. This approach allowed them to implement hand tracking at a low cost. However, they faced trade-offs: low hand recognition accuracy, a hand-tracking delay that the user could feel because of the low frame rate, and two-dimensional movement without a z-axis because tracking relied on a single camera. They were able to implement kinesthetic haptic feedback at a low cost thanks to the SG-90 servo motors, which can generate a torque of 1.8 kg·cm. This torque was strong enough to stop the user's finger. The string attached to the user's fingertip and the motor stops the finger from moving forward when it touches a virtual object (Stop Motion feedback) (Jang et al., 2022).

To provide haptic feedback for better immersion in the metaverse through a haptic glove, the virtual hands must first follow the user's hands without disconnection or delay. Poor recognition, disconnection, and delay in hand tracking can decrease immersion in the metaverse (Li et al., 2015). In addition, every object has its own elasticity. When external pressure is applied, the object deforms and generates an elastic, repulsive force accordingly. If a user interacts with a virtual object expecting it to be soft and malleable but receives only Stop Motion feedback (stopping the user's finger from moving forward when it touches an object), the gap between the user's expectation and the actual feedback reduces the sense of immersion. Therefore, this paper presents a small, compact, and inexpensive haptic glove that provides kinesthetic haptic feedback, including elastic force, together with high-quality hand tracking, so that even developing countries and individual users can experience a more immersive metaverse.

The glove was designed to maintain a high hand-tracking recognition rate with the LMC even while the Haptic Glove is worn, and to release the string when the user pushes down on an elastic object so that the elasticity of the object can be felt. In addition, demo content was produced to explore how connecting EMG sensors to the Haptic Glove could support fine motor development and rehabilitation.

Materials and Methods

1. System diagram

Our system consists of a Haptic Controller, Unity Engine, Pose Data (Hand Tracking), and EMG Data.

Users can play our demo content with our Haptic Glove. Using the Leap Motion Controller (LMC), the user's hands are tracked and the Pose Data is visualized in Unity as virtual hands. The user can interact with virtual objects through these virtual hands. When the user touches an object in Unity, the data needed to control the Haptic Glove is computed and collected. The data is then passed to the Arduino UNO through a serial connection (Figure 1).

When a collision occurs between a virtual object and the user's virtual hands, the virtual object deforms according to its elastic property and the pressure the user applies. The deformation of the object is also visualized in Unity. The pressure applied by each finger (Index 1, Index 2, and Index 3, counted from the thumb) and the elasticity of the touched object are transferred to the Arduino UNO, which converts the received data into angle data to control the servo motors. Through this process, the user can feel the virtual object and its elasticity. While the user interacts with the virtual object, EMG data is collected through the Arduino UNO from EMG sensors attached to the user's arm and the back of the hand. The collected EMG data is visualized as a graph UI in Unity. The EMG data was added for use in future research and to explore extending the Haptic Glove to fine motor development and rehabilitation.

2. Object deformation in Unity

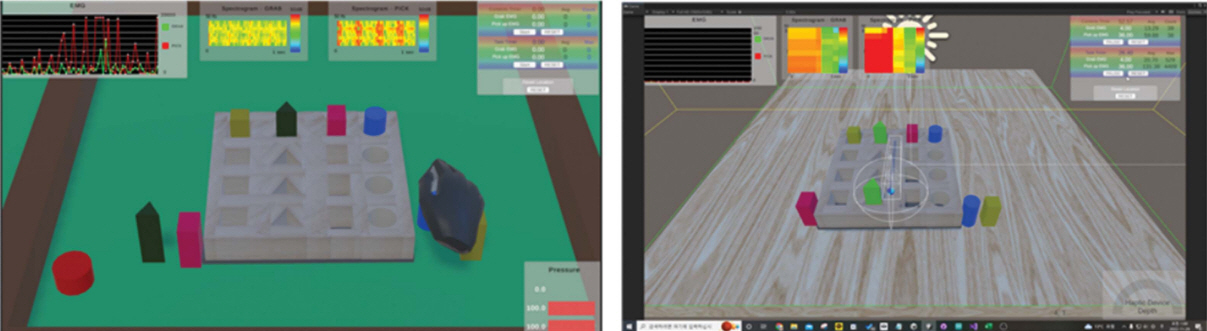

The demo content for this paper is implemented in the Unity Engine. The overall look of the demo content is shown in Figure 2. There are three virtual balls with different elastic properties, and each deforms according to its elasticity. The red ball is soft, the yellow ball is a steel ball that does not deform, and the football has an intermediate elasticity.

In computer graphics, a three-dimensional object is defined as a mesh composed of polygons, where each polygon is a triangle consisting of three vertices. When a hand-tracked virtual hand touches a deformable object, the vertices of the object are updated in real time according to the pressure applied by the user's virtual hand in order to deform the object. The deformation process consists of three phases: pressure on the surface of the object from the user, elastic force, and attenuation (Flick).

The direction vector of a vertex's movement points from the contact point to the vertex. According to the inverse-square law, the pressure that the vertex receives from the user's finger is inversely proportional to the square of the distance between the contact point and the vertex. Depending on the resolution and size of the object's mesh, this distance can be less than 1 m, in which case a pure $1/x^2$ term would amplify the force rather than attenuate it. We therefore set the force that the vertex receives as $\frac{1}{(1 + \alpha x)^2}$, where $\alpha$ is the attenuation coefficient and $x$ is the distance between the contact point and the vertex.
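The attenuation rule described above can be illustrated with a minimal Python sketch. It is not the Unity implementation used for the demo content: the function name `vertex_force`, the `pressure` scaling factor, and the default value of `alpha` are assumptions introduced only for illustration.

```python
import math

def vertex_force(contact, vertex, pressure, alpha=1.0):
    """Force vector on one mesh vertex (hypothetical helper).

    Direction: from the contact point to the vertex.
    Magnitude: pressure / (1 + alpha * x)^2, where x is the distance
    between the contact point and the vertex. Scaling by `pressure`
    is an assumption; the text only gives the attenuation term.
    """
    offset = [v - c for v, c in zip(vertex, contact)]
    x = math.sqrt(sum(o * o for o in offset))
    if x == 0.0:
        # The direction is undefined exactly at the contact point.
        return (0.0, 0.0, 0.0)
    scale = pressure / (1.0 + alpha * x) ** 2
    return tuple(scale * o / x for o in offset)
```

Because the denominator is never smaller than 1, the attenuation term stays bounded even for vertices very close to the contact point.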

The elastic force (linear stiffness) is given by $F = kd$, where $k$ is the elasticity coefficient and $d$ is the displacement. The elastic force decreases over time. We visualized the deformation of objects according to this formula, and we experimentally tuned the maximum distance by which each object can deform, considering each object's elasticity and size. Using this maximum distance, we display the percentage by which an object has deformed in a UI at the bottom right of the Unity scene (Figure 2). This information is also used to control the servo motors. The first gauge bar of the deformation rate UI is for finger Index 1, and the second and third bars are for finger Index 2 and Index 3. Each bar shows the ratio of the current deformation to the maximum deformation: 0.0 means no deformation (no touch), and 100.0 means the object has deformed by the maximum amount. For example, as soon as the yellow steel ball is touched, its gauge bar reaches 100.0, so no deformation occurs. In contrast, the gauge bar of the rubber ball increases gradually as the pressure increases until it reaches 100.0.
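As a rough illustration of the gauge described above, the following Python sketch computes the linear stiffness force and the deformation percentage. The function names and the convention that a rigid object has a maximum deformation of zero are assumptions made for this example, not details taken from the demo content.

```python
def elastic_force(k, d):
    """Linear stiffness: F = k * d."""
    return k * d

def deformation_percent(d, d_max, touching=True):
    """Ratio of current to maximum deformation, in the range 0.0 to 100.0.

    A rigid object is modeled here (as an assumption) with d_max == 0,
    so its gauge jumps to 100.0 as soon as it is touched.
    """
    if not touching:
        return 0.0
    if d_max <= 0.0:
        return 100.0
    return max(0.0, min(100.0, 100.0 * d / d_max))
```

With these definitions, a soft ball pressed halfway to its limit reports 50.0, while the rigid ball reports 100.0 on first contact.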

3. Haptic glove

This paper intends to develop a low-cost Haptic Glove that even developing countries and individuals can afford. Our objective is to make a Haptic Glove that is not only inexpensive but also practically usable by the public. With this goal in mind, we chose the components of the glove, such as the actuators, the hand-tracking method, and the color of the glove, by weighing their trade-offs. The most expensive component of the prototype was the LMC, which cost about 100 US dollars (about 120,000 KRW).

Two methods were considered for kinesthetic haptic feedback: an exoskeleton method and a string-based method. The string-based method was considered less expensive and lighter than the exoskeleton method. Both methods were evaluated as having relatively high accuracy. However, the exoskeleton method was evaluated as too complicated for a non-specialist user (Achberger et al., 2021). Given this information, we chose the string-based method because it suited this paper's goal.

Two methods were then considered for generating kinesthetic haptic feedback with a string. The first uses a DC motor with an encoder, and the second uses a servo motor, a potentiometer, and a restoration spring. To apply a force to the user's finger in real time through a string, the string must always be taut so that it can push back the user's finger immediately. Therefore, when the user moves a finger, the amount of string that has been wound or unwound must be tracked in real time in order to keep the string taut and to calculate how much string should be wound or unwound.

With the first method, unlike a servo motor, which works in a closed loop, the DC motor can be turned by the movement of the user's finger even while it is powered. Meanwhile, the encoder senses the amount of rotation of the DC motor. Once the rotation of the motor is known, the amount of string that should be wound or unwound can be calculated as well. In this way, the desired length of the string can be maintained.

In the second method, a restoration spring keeps the string taut. As the user bends a finger, the string is unwound against the spring. When the user stretches the finger, the spring rewinds the string. In this way, the string stays taut. A potentiometer is attached to the end of each of the three strings. It outputs an analog value from 0 to 1023 as it rotates from 0 to 180 degrees, and it rotates by as much as the string moves. This means the current length of the string can be tracked in real time, so the string can also be kept taut with this method.
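The mapping from a potentiometer reading to a string length can be sketched as follows. This is a Python illustration rather than the Arduino firmware, and the spool radius (`spool_radius_mm`) is a hypothetical value, since the text does not give the dimensions of the reel.

```python
import math

ADC_MAX = 1023          # analog range stated in the text: 0 to 1023
POT_RANGE_DEG = 180.0   # rotation range stated in the text: 0 to 180 degrees

def pot_to_angle(reading):
    """Convert a raw potentiometer reading to a rotation angle in degrees."""
    reading = max(0, min(ADC_MAX, reading))
    return reading * POT_RANGE_DEG / ADC_MAX

def string_length_mm(reading, spool_radius_mm=10.0):
    """Unwound string length as arc length = angle (rad) * radius.

    spool_radius_mm is an assumed value used only for illustration.
    """
    return math.radians(pot_to_angle(reading)) * spool_radius_mm
```

Under these assumptions, a full-scale reading of 1023 corresponds to half a turn of the spool.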

This method makes it possible to keep the string taut and to track its length. However, there is one significant issue: the servo motor works in a closed-loop system, which means that once it is powered, it keeps trying to hold the angle it was given. In this state, if an external force greater than the motor can withstand tries to rotate it, the motor will break down due to overcurrent. To solve this problem, we calculate the ideal angle of the servo motor from the tracked string data. When there is no touch, we keep the actual angle of the servo motor 1 degree behind the ideal angle so that the user can move their finger freely. When a collision occurs, the servo motor can then be moved to the ideal position immediately.
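The 1-degree slack rule can be written as a small Python sketch. The function name, the angle limits, and the sign convention (that "behind" means a smaller angle) are assumptions for illustration; the actual firmware may define the direction differently.

```python
def servo_command(ideal_angle, touching, slack_deg=1.0, lo=0.0, hi=180.0):
    """Angle to send to the servo (hypothetical helper).

    No touch: stay slack_deg behind the ideal angle so the finger moves freely.
    Touch: snap to the ideal angle so the string can resist the finger.
    """
    target = ideal_angle if touching else ideal_angle - slack_deg
    # Clamp to the assumed mechanical range of the servo.
    return max(lo, min(hi, target))
```

For example, with an ideal angle of 90 degrees the servo is commanded to 89 degrees while the finger moves freely and to 90 degrees at the moment of contact.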

After comparing the trade-offs of the two methods, we adopted the second method. The size and weight of the DC motor with an encoder made the glove uncomfortable for users. Smaller and lighter DC motors were available, but their prices were too high for the objective of this paper.

Servo motors, in contrast, are inexpensive and light. Jang et al. (2022) used SG90 servo motors for their low-cost haptic glove. Because open-source 3D models of haptic glove components for servo motors were available, we could reduce the time and effort needed to design the components.

The second method works well for interaction with rigid objects. When the user touches a rigid object, the object does not deform, so the user's finger stops at the contact point. For this scenario, it is enough to stop the finger from moving forward when the user touches the virtual object. This paper presents not only Stop Motion feedback but also elastic force feedback (linear stiffness feedback). To express elastic force feedback, the surface of the deformable object must be pushed down after the user's finger collides with the object. The elastic force is $F = kd$, where $k$ is the elasticity coefficient and $d$ is the distance by which the surface is pushed down from its original position.

To let the user feel the displacement as the object deforms, the servo motor should keep pulling the user's finger so that the user can still feel the elastic force. However, a closed-loop servo motor will break down if the pressure the user applies exceeds the limit the motor can endure. To solve this issue, we use the pressure data calculated in Unity together with the maximum deformation range set earlier. As the finger presses the object, the string is released by an amount corresponding to the change in pressure until the pressure ratio reaches its maximum. However, when we released the string in real time as the user pressed the object, the user could not feel the resistance force, because the string was released before the force could be felt. To give the user enough time to feel the force, a delay of 20 ms was inserted between successive angle updates of the servo motor.
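The stepwise release with a 20 ms pause can be sketched in Python as follows. This is only an illustration of the idea under stated assumptions: the names `release_string` and `write_angle`, the `degrees_per_full_press` scale, and the direction in which the angle grows are hypothetical and are not taken from the firmware.

```python
import time

def release_string(start_angle, pressure_ratios, degrees_per_full_press=30.0,
                   write_angle=print, delay_s=0.020, sleep=time.sleep):
    """Release the string in proportion to the change in pressure ratio.

    pressure_ratios: successive values in [0, 1] reported by the simulation.
    write_angle: callback that sends an angle to the servo (assumed interface).
    delay_s: pause between angle updates (20 ms in the text).
    """
    angle = start_angle
    previous = 0.0
    sent = []
    for ratio in pressure_ratios:
        ratio = max(0.0, min(1.0, ratio))  # stop releasing at the maximum
        angle += (ratio - previous) * degrees_per_full_press
        previous = ratio
        write_angle(angle)
        sent.append(angle)
        sleep(delay_s)  # hold the angle briefly so the force can be felt
    return sent
```

Passing `sleep` and `write_angle` as parameters keeps the sketch testable without hardware; on a real device they would be replaced by the serial write and the actual delay.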

4. Analysis of the precision of the Leap Motion with haptic glove

Jang et al. (2022) used Google's MediaPipe to implement low-cost hand tracking in their system. This method, which is based on a single camera, has issues such as unstable hand-tracking recognition, inaccurate position data (especially along the z-axis), and a delay when sending the position data to Unity over socket communication. The authors noted that these issues could reduce user immersion. This paper has tried to solve these issues so that we can present a Haptic Glove that is not only inexpensive but also practically usable. The LMC (Leap Motion Controller) costs around 100 US dollars, which could be considered quite expensive. Despite the price, we adopted the LMC for our hand-tracking system because of its stability and accuracy. Users who participated in our interview, which is described later, mentioned that they did not notice any delay in their virtual hands.

While designing our demo content, before the Haptic Glove was completed, we tested the content without the glove. Hand tracking and the visualization of the virtual hands were highly accurate and stable. However, when we tested the demo content again with an early prototype of the Haptic Glove, hand tracking was markedly unstable. The LMC uses two infrared stereo cameras with three infrared LEDs. It relies on infrared light that is emitted by the LEDs, reflected by objects, and captured by the cameras. The detailed functions of the LMC are patent-protected, but it estimates depth information based on a stereoscopic view of the scene (Vysocký et al., 2020).

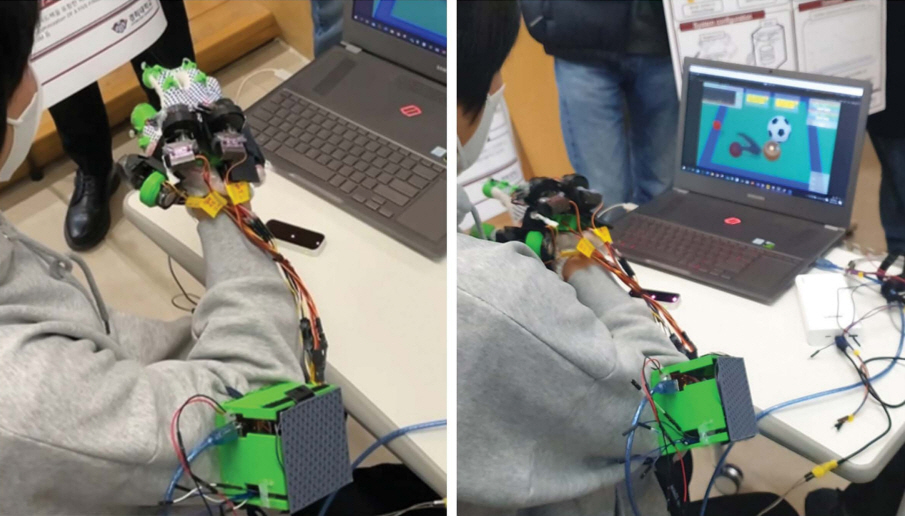

The glove used for our early prototype was black. When we used this glove to test our demo, hand-tracking recognition was unstable. This was caused by the color of the glove and by the components attached to the back of the glove. The LMC calculates the distance of objects using infrared light. A dark or black object absorbs most of the light, and if the object does not reflect light back to the sensor, the LMC cannot estimate its depth. For this reason, we chose a white glove, which reflects most of the light (Figure 3). In addition, if objects with depth similar to that of the user's hand sit on the glove, hand tracking becomes unstable. To alleviate this problem, only the minimum necessary components were placed on the back of the glove, and the other parts were packed in a box case that is worn on the user's forearm with velcro. A soft plastic foam was also inserted between the back of the glove and the remaining components to create a slight height difference.

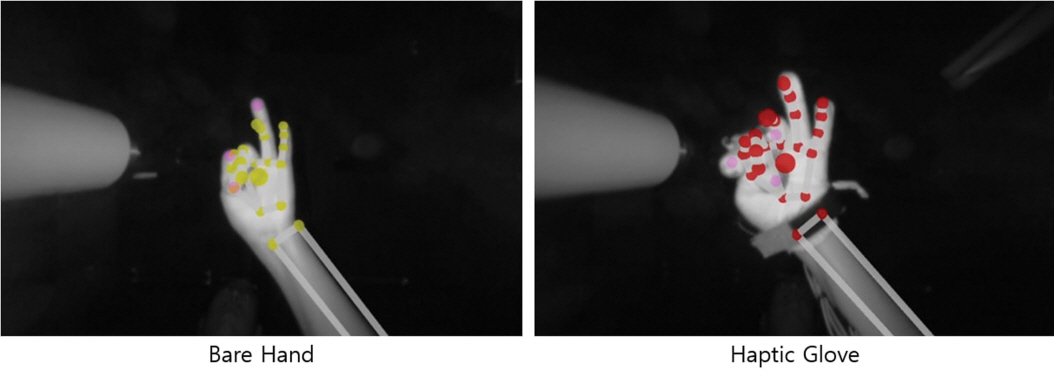

An experiment was conducted to compare the hand-tracking recognition rate of a bare hand with that of our redesigned Haptic Glove. The result is shown in Table 1. Several tasks were set, such as clenching and opening a fist, a picking action with two fingers, and bending the fingers one by one. The same tasks were used for the bare-hand test and the Haptic Glove test, with a total of 600 tasks per test. All 600 tasks in each test were performed with the palm facing the LMC (front-side tasks). Hand tracking was never lost during the 600 bare-hand tasks and was lost only once during the Haptic Glove test.

With a hand-flipping task, the outcome was different. There was no disconnection in the bare-hand test. In the Haptic Glove test, however, out of 27 trials, 8 showed no tracking issue and 14 showed good tracking with a short disconnection. In total, disconnections occurred in 19 of the 27 trials. As a result, the recognition rate for the front-side tasks was 100% for the bare hand and 99.83% for the Haptic Glove, whereas for the flipping task it was 100% for the bare hand and 29.63% for the Haptic Glove. Our Haptic Glove is therefore recommended for content that mostly uses front-side tasks. The demo content used for this paper consisted entirely of front-side tasks, so no user expressed any discomfort with hand tracking.
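The recognition rates quoted above follow directly from the trial counts. The short Python sketch below reproduces the arithmetic; the function name `recognition_rate` is introduced here only for illustration.

```python
def recognition_rate(trials, disconnections):
    """Percentage of trials completed without losing hand tracking."""
    return round(100.0 * (trials - disconnections) / trials, 2)

front_bare = recognition_rate(600, 0)    # front-side tasks, bare hand
front_glove = recognition_rate(600, 1)   # front-side tasks, Haptic Glove
flip_glove = recognition_rate(27, 19)    # flipping task, Haptic Glove
print(front_bare, front_glove, flip_glove)  # 100.0 99.83 29.63
```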

Additionally, we analyzed the matching rate between a real hand and the tracked virtual hand. The matching rate test measured how accurately the joints and fingertips of the real hand and the tracked virtual hand coincide. We recorded the whole process of the tasks used for the recognition rate test and took screenshots of each task. A 30 × 30-pixel circle is placed on each joint or fingertip of the real hand that does not properly match. If the circle is completely apart from the corresponding point of the virtual hand, it is counted as a mismatched circle (Figure 4). The result differed from that of the recognition rate test: overall, the matching rate of the Haptic Glove was higher than that of the bare hand. We analyzed the result from two aspects, by motion and by finger. The Haptic Glove had a higher rate in 5 of the 12 motions, the two had the same rate in 6 motions, and the bare hand had a higher rate in only one motion (Table 2). In the per-finger analysis, the Haptic Glove had a higher matching rate for the Index 1, 2, and 3 fingers, both had the same matching rate for the Index 5 finger, and the bare hand had a higher matching rate for the Index 4 finger (Table 3).

Figure 4. When the pink circle and the yellow circle of the virtual hand are completely apart from each other, the point is counted as a mismatch.

The bare hand had a slightly higher hand-tracking recognition rate. The Haptic Glove achieved a high recognition rate only for the front-side tasks, which is a limitation of our Haptic Glove. On the other hand, the matching rate results show that the Haptic Glove generally has a higher matching rate. We suppose this is because the plastic components on the fingertips make the fingers look wider than those of a real hand.

5. EMG data analysis

We wanted to design our demo content as an example of a practical application. A number of studies have combined haptic technology, fine motor skills, and EMG sensors, including research on the correlation between fine motor development and haptic technology, fine motor rehabilitation using haptic technology, fine motor rehabilitation treatment using EMG sensors, and rehabilitation treatment using the LMC.

More specifically, there was a haptic cognitive training study on developmental coordination disorder (Wuang et al., 2022), a patent for a hand rehabilitation system based on hand motion recognition (Kim, 2015), a multi-finger control study for patients with cerebral palsy (Park and Xu, 2017), and a haptic-gripper study for the analysis of fine motor behavior in autistic children (Zhao et al., 2018). Against this background, we designed demo content in which a haptic glove and an EMG sensor can be used together for fine motor development and rehabilitation. We also conducted an experiment to check the correlation between a haptic glove and fine motor skills. However, this experiment was carried out with only one user. Its purpose was to identify the potential of the demo content, and we recommend treating it only as a simple reference.

To sense the EMG signal, we used two DFRobot SEN0240 EMG sensors (Figure 5). One sensor was attached to the user's forearm for grab-motion detection and the other to the back of the user's hand for pick-motion detection. However, when the user moved their hand, a small gap opened between the back of the hand and the sensor, and this gap introduced a lot of noise. To prevent the gap, we used an elastic strap to hold the sensor tightly against the back of the user's hand.

Additional demo content was designed for the EMG analysis. We bought and measured a real learning toy for matching colored shapes, intended for infants and toddlers (Figure 6), and made a 3D model of it (Figure 7).

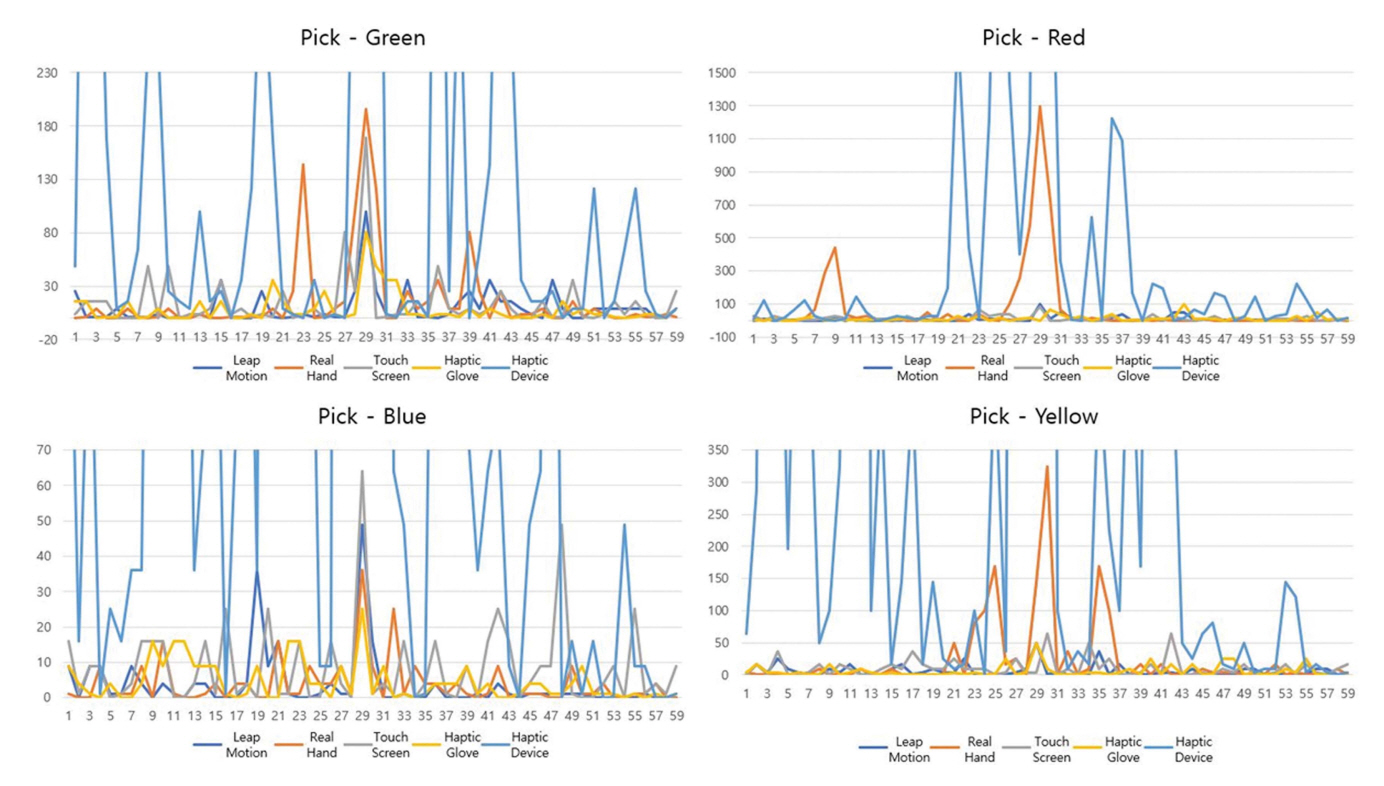

The task for EMG measurement was to pick up an object of a given color and shape and fit it into the correct location. EMG was measured with the same task for each content: the real learning toy, Leap Motion, touch screen, Haptic Glove, and Haptic TouchX (Figures 8 and 9).

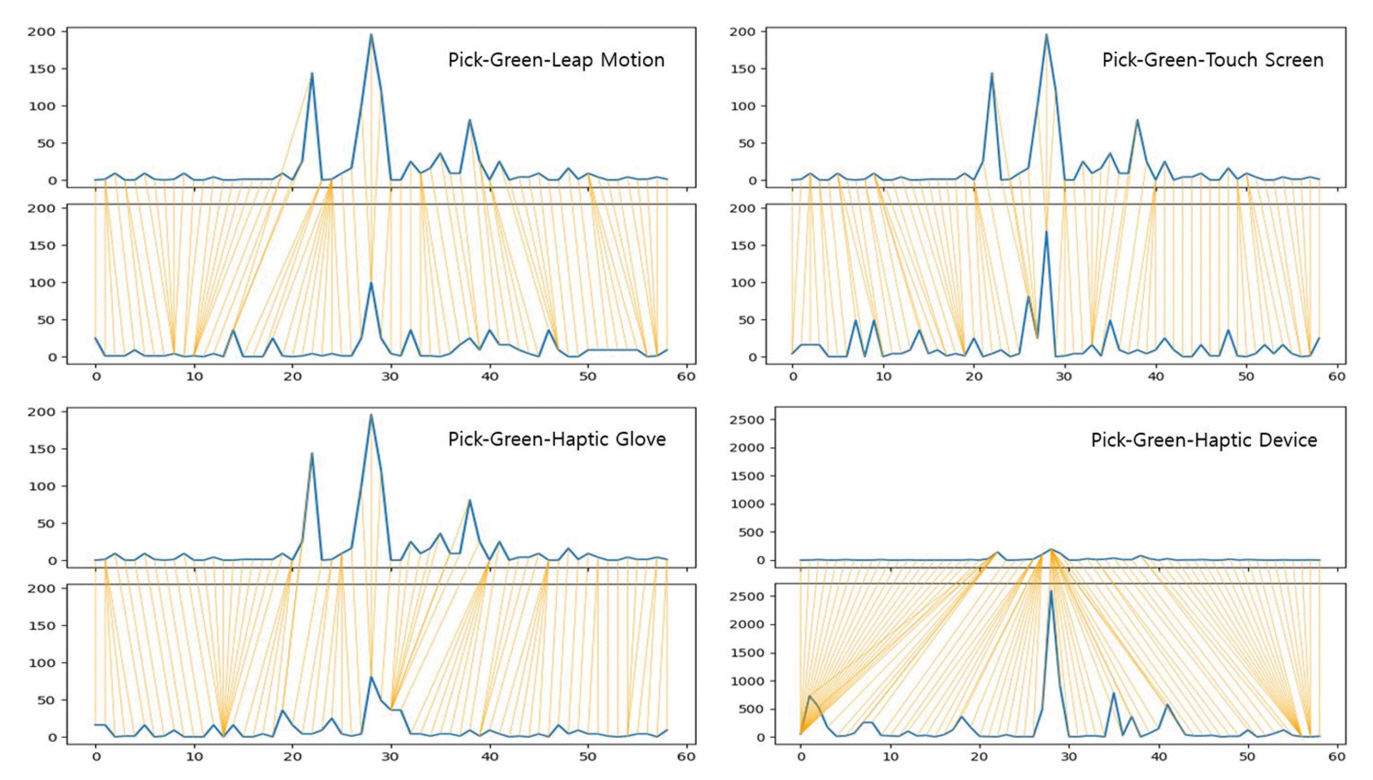

The EMG data is written continuously to a CSV file. At the same time, a counter is incremented every 10 seconds, and for every count the mean and maximum values are written to the CSV file as well (Table 4). The whole data set was separated by count number, and we extracted a graph over a fixed range from each of the separated data segments. The range was set to ±3 seconds around the maximum value of each count. Figures 10 and 11 show the overall tendencies of the EMG data from each content. The label 'Grab-Green' in the top left panel refers to EMG data from the sensor located on the forearm for grab detection while the user interacts with a green object (Figure 10).
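The windowing step described above can be sketched in Python as follows. The sampling rate is not stated in the text, so `sample_rate_hz` is an assumed parameter, and the function and field names are hypothetical. The sketch also assumes that the ±3 second segment is cut from the full recording, so it may extend past a 10-second boundary.

```python
def summarize_windows(samples, sample_rate_hz, window_s=10.0, margin_s=3.0):
    """Split a recording into fixed windows and extract a segment around each peak.

    samples: EMG values sampled at a fixed rate (assumed).
    Returns one dict per window with its count number, mean, maximum,
    and the samples within +-margin_s of the window's maximum.
    """
    per_window = int(window_s * sample_rate_hz)
    margin = int(margin_s * sample_rate_hz)
    results = []
    for count, start in enumerate(range(0, len(samples), per_window), start=1):
        window = samples[start:start + per_window]
        # Index of the largest sample inside this window.
        peak_offset = max(range(len(window)), key=window.__getitem__)
        peak_index = start + peak_offset
        lo = max(0, peak_index - margin)
        hi = min(len(samples), peak_index + margin + 1)
        results.append({
            "count": count,
            "mean": sum(window) / len(window),
            "max": window[peak_offset],
            "segment": samples[lo:hi],
        })
    return results
```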

Figure 10. Comparison of the tendencies of the Grab motion EMG data graphs for each content (Leap Motion, Real Hand, Touch Screen, Haptic Glove, Haptic Device).

Figure 11. Comparison of the tendencies of the Pick motion EMG data graphs for each content (Leap Motion, Real Hand, Touch Screen, Haptic Glove, Haptic Device).

The EMG data graph for each content was analyzed with the dynamic time warping (DTW) method in Python. First, using Openpyxl, we loaded all the EMG data from the CSV file and parsed it into a separate list for each content. The EMG data of each content was then compared with the EMG data of the real learning toys (Figures 12 and 13).
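For readers unfamiliar with DTW, the following is a minimal textbook implementation in plain Python. It is a sketch of the general technique, not the analysis script used for this paper; the absolute difference as the local cost and the absence of a warping window are assumptions.

```python
def dtw_distance(a, b):
    """Classic dynamic time warping distance between two sequences.

    Uses |a[i] - b[j]| as the local cost and allows the three standard
    steps (insertion, deletion, match). A lower value means the two
    curves are more similar in shape.
    """
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = best cumulative cost aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j],      # a[i-1] repeats
                                 cost[i][j - 1],      # b[j-1] repeats
                                 cost[i - 1][j - 1])  # both advance
    return cost[n][m]
```

Because DTW tolerates local stretching in time, two recordings of the same motion performed at slightly different speeds can still yield a small distance.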

Figure 12. DTW analysis of Grab motion EMG data for each content, comparing the tendency of the real hand graph (upper graph) with each content's graph (lower graph).

Results and Discussion

1. User experience analysis for virtual elastic objects

We interviewed four users to evaluate the elasticity feedback from our Haptic Glove. The users experienced the demo content without the Haptic Glove first. Secondly, they were asked to grab real balls (a soft rubber ball, an elastic hard ball, and a completely solid ball) several times. Then they were asked to grab virtual balls (a soft rubber ball, an elastic hard ball, a completely solid ball) several times, and compare the interactions between the real balls and virtual balls. The users were asked to freely describe the naturalness, recognition rate, and haptic feedback of virtual hands and the elasticity of virtual objects. We received similar answers from all four users.

All four users said they could feel a realistic sensation of grabbing a virtual object, as well as a feeling of elasticity that differed according to the properties of the objects. They also mentioned that hand tracking was smooth enough that they did not feel uncomfortable during the interview. However, they also said that the feeling of elasticity was somewhat less realistic than they had expected. This is because of the 20 ms delay we had to apply between angle updates of the servo motor. In a real environment, a user receives continuous force feedback while grabbing or pushing an object, whereas the 20 ms delay makes the force feedback discontinuous.

During this interview, we also conducted an EMG analysis (Figure 14). We could not find a clear correlation between the EMG data from interacting with real objects and the EMG data from interacting with virtual objects through our Haptic Glove. Before the interview, we presumed that the higher the elasticity of an object, the higher the EMG data would be. In general, the EMG data was high when the elasticity was high. Sometimes, however, the EMG data from the softest ball and the second softest ball was higher than that from the solid ball. It was also confirmed that even between the softest ball and the solid ball, the magnitude relationship changed without a clear pattern.

Figure 14. EMG data graphs of four users interacting with real objects and with virtual objects through the Haptic Glove. We could not find an association between the Haptic Glove data and the real hand (real object) data. A possible reason for this lack of association is discussed in the text.

The same phenomenon also occurred when users interacted with real objects. We presume that the result differed from our preliminary hypothesis because people tend to grab and pick up an object with variable strength regardless of the object's elasticity. Even when the same person grabs an object several times, the amount of muscle usage can differ each time, so the variability of the EMG data could be even higher across different people. Another possible reason is that how strongly a user grabs and picks up an object tends to depend more on the object's mass than on its elasticity. A light object requires very little muscle usage, while a heavy object requires a lot.

2. EMG data analysis for correlation between haptic and fine motor

EMG data was analyzed by having a user perform the same task, moving objects of certain colors to certain locations, for each content (the real learning toys, Leap Motion, touch screen, Haptic Glove, and Haptic TouchX). This experiment also produced a result that was somewhat different from what we expected. Our hypothesis was that, thanks to its force feedback, the Haptic Glove would rank highest and the LMC second in how closely the tendency of each content's EMG data matches that of the real learning toys. The DTW value shows how different two graphs are (Table 5): the lower the DTW result, the more similar the data is to the real learning toy data. The result was that the LMC data ranked highest and our Haptic Glove second (Table 6).

First, for the grab-motion result, where the EMG sensor was attached to the user's forearm, the LMC data ranked first in the DTW analysis and the Haptic Glove second. The gap between first and second place was very small. The touch screen data and the Haptic TouchX data took third and last place, respectively. As we assumed, the Haptic Glove data and the LMC data were more similar to the real data than the others. However, the LMC scored slightly better than the Haptic Glove. Further analysis of this result will be needed in subsequent studies.

Second, the pick-motion result, where the EMG sensor was attached to the back of the user's hand, was similar. The LMC data again ranked first in the DTW analysis, and the Haptic Glove and touch screen data shared second place. The gaps among the first three places were small. We found that performing the task in the touch screen content produces an EMG tendency quite similar to that of the real learning toys. We attribute this similarity to the fact that picking up and moving a light real object with a real hand is highly similar to the motion of performing the task in the touch screen content. For the pick motion as well, the LMC data scored slightly better than the Haptic Glove data.

The Haptic TouchX data shows the lowest similarity for both the grab and pick motions. This is due to the characteristics of the device. Haptic TouchX is a grounded device, which means it can provide force feedback for an object's mass. As mentioned earlier, a heavier object generally requires more muscle usage to pick up, so the EMG data of Haptic TouchX was much higher than that of the others. The fact that users exerted their fine motor skills more with Haptic TouchX implies that this method has a higher potential to help users' fine motor development and rehabilitation, even though it may be the method that differs most from interaction with a real object. For a more precise analysis, the mass of the real object must be measured and applied to the Haptic TouchX content as well.

The insight from these results is that when an interaction requires the same or a similar hand action, the tendency of the EMG data is also close to that of an interaction with a real object. That is why the LMC and the Haptic Glove scored high in the EMG data analysis and ranked first and second, respectively. However, the result that the LMC data, for which the user receives no force feedback during the task, showed higher similarity than the Haptic Glove data needs to be analyzed in future research with more interviewees and more complex tasks. The tasks used in this paper were so simple and basic that they did not require complicated use of fine motor skills. To find a more accurate correlation between haptic feedback and fine motor development and rehabilitation, more complicated and sophisticated tasks will be required.

Conclusion

This research was conducted with the aim of creating an inexpensive, compact, practically usable, and wireless wearable haptic glove that can also convey the elasticity of a virtual elastic object. In the process of designing several prototypes, we faced a few trade-off issues, which we had to resolve through compromises and workarounds. As a result, we were able to build the glove itself for around 16.3 US dollars (around 21,780 KRW). In detail, this price covers the three potentiometers (1,500 KRW, 500×3), the three badge reels (2,370 KRW, 790×3), the three MG-90 servo motors (9,510 KRW, 3,170×3), the Arduino UNO and the USB cable (6,400 KRW), and one pair of white gloves (1,000 KRW), and excludes the 3D printed components. Including the two kinds of external equipment (two EMG sensors, 88,040 KRW, and one LMC, around 120,000 KRW), the total cost is around 172 US dollars (around 230,000 KRW). Compared to other high-end haptic gloves that are sold commercially, it is made at a significantly lower cost.
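The cost breakdown can be checked with a short sum. The sketch below uses only the prices listed in the text. The exchange rate is an assumption, derived from the stated equivalence of 21,780 KRW and 16.3 US dollars, and the variable names are illustrative. Note that the itemized glove components as listed sum to 20,780 KRW, which is 1,000 KRW below the stated glove total; the text does not itemize the difference.

```python
# Itemized glove component prices in KRW, as listed in the text.
glove_parts_krw = {
    "potentiometers (500 x 3)": 500 * 3,
    "badge reels (790 x 3)": 790 * 3,
    "MG-90 servo motors (3170 x 3)": 3170 * 3,
    "Arduino UNO and USB cable": 6400,
    "white gloves (one pair)": 1000,
}

# External equipment prices in KRW (the LMC price is approximate in the text).
external_krw = {
    "EMG sensors (two)": 88040,
    "LMC (approximate)": 120000,
}

glove_total = sum(glove_parts_krw.values())
external_total = sum(external_krw.values())
grand_total = glove_total + external_total

# Assumption: exchange rate implied by "16.3 US dollars (around 21,780 KRW)".
KRW_PER_USD = 21780 / 16.3

print(f"Glove components: {glove_total:,} KRW")
print(f"External equipment: {external_total:,} KRW")
print(f"Total: {grand_total:,} KRW (about {grand_total / KRW_PER_USD:.0f} USD)")
```

Both the itemized sum and the stated glove total round to a combined cost of around 230,000 KRW once the external equipment is included.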

In terms of weight, our Haptic Glove weighs 356 g in total, which is not especially light compared to other commercialized haptic gloves (Figure 15). The average weight of four commercialized haptic gloves that support force feedback is 385 g (approximately, HaptX Gloves G1: 450 g, SenseGlove Nova: 320 g, Dexmo: 320 g, VRluv: 450 g). At 356 g, our Haptic Glove is therefore slightly lighter than this average. However, our focus was to make the haptic system part, which is worn on the user’s hand, as light as possible so that the user feels less discomfort when wearing it. As a result, we were able to reduce the weight of the part worn on the hand to 184 g.

The weight of our Haptic Glove (left: control system, 172 g; middle: both, 356 g; right: haptic feedback system, 184 g).
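The weight comparison is simple arithmetic and can be reproduced as follows. This is a minimal sketch that uses only the approximate weights quoted in the text; the variable names are illustrative.

```python
# Approximate weights (g) of the four commercial gloves cited in the text.
commercial_gloves_g = {
    "HaptX Gloves G1": 450,
    "SenseGlove Nova": 320,
    "Dexmo": 320,
    "VRluv": 450,
}

# Weights (g) of the two parts of our Haptic Glove, from the figure caption.
control_system_g = 172
haptic_feedback_system_g = 184

average_commercial_g = sum(commercial_gloves_g.values()) / len(commercial_gloves_g)
our_total_g = control_system_g + haptic_feedback_system_g

print(f"Average commercial glove: {average_commercial_g:.0f} g")
print(f"Our Haptic Glove (total): {our_total_g} g")
print(f"Difference from average: {average_commercial_g - our_total_g:.0f} g")
print(f"Part worn on the hand: {haptic_feedback_system_g} g")
```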

However, our Haptic Glove needs functional improvements to increase its performance. The 20 ms delay, which currently prevents the user from feeling continuous kinesthetic force feedback, should be shortened or eliminated. The current prototype uses wired serial communication instead of wireless communication because of performance problems and unstable data communication between the Arduino UNO and the HC06 Bluetooth module. For a more immersive metaverse experience, the next prototype should be made wireless using another Bluetooth module that guarantees stable data communication.

Due to the nature of the haptics research field, results are usually evaluated by user experience (UX) rather than quantitative data. The four users who participated in the experiment ‘User experience analysis for virtual elastic objects’ replied that grabbing the solid ball felt very realistic and more immersive. They also mentioned that when they interacted with the soft ball, they could feel the elasticity of the ball and the change in resistance force according to the pressure they applied. However, they responded that, because of the 20 ms delay, the force feedback was not as smooth as they had expected. A 20 ms delay is quite large for haptic feedback, because an average person can distinguish a 500 Hz fine vibration and the delay can cause an unnatural feeling (Booth et al., 2003). Despite the delay, they all responded that they experienced greater immersion with our Haptic Glove than with the LMC alone or with stop motion feedback alone. Additionally, EMG data were used for quantitative analysis; however, the result differed from our presumptions. It was also found that if the object is very light, the amount of EMG activity is not significantly related to the elasticity of the object.
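To put the 20 ms delay next to the 500 Hz figure, the two can be converted to the same units. The sketch below is only a back-of-the-envelope comparison. Treating the delay as the interval between successive force updates is our assumption for illustration, not something the measurements above establish.

```python
# Values taken from the text.
delay_ms = 20        # reported delay of the force feedback
vibration_hz = 500   # vibration frequency an average person can distinguish

# Assumption: the delay acts as the interval between force updates.
effective_update_rate_hz = 1000 / delay_ms

# Period of one cycle of the distinguishable vibration, in milliseconds.
vibration_period_ms = 1000 / vibration_hz

# Number of vibration cycles that fit inside one delay interval.
cycles_per_delay = delay_ms / vibration_period_ms

print(f"Effective update rate: {effective_update_rate_hz:.0f} Hz")
print(f"Period of a {vibration_hz} Hz vibration: {vibration_period_ms:.0f} ms")
print(f"Vibration cycles per delay interval: {cycles_per_delay:.0f}")
```

Under this assumption, the delay spans ten cycles of a vibration that users can still perceive, which is consistent with the users’ report that the feedback did not feel smooth.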

The demo content used in the EMG analysis of the relationship between haptics and fine motor development and rehabilitation is based on a block-moving fine motor development toy, in which the user picks up blocks of certain colors and moves them to certain locations. As a result, the grab-motion data ranked in the order of LMC, Haptic Glove, touch screen, and Haptic TouchX. The pick-motion data ranked in the order of LMC, Haptic Glove and touch screen (joint second place), and Haptic TouchX.

This ranking is determined by how closely the EMG data of each interaction follows the tendency of the EMG data from the interaction with the real learning toys. It was therefore confirmed that the LMC and the Haptic Glove, whose motions are most similar to picking up the actual object, show the highest similarity. However, this experiment analyzes how similar the tendency of the fine motor data is, not how much the fine motor skills are used.
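The idea of ranking interactions by DTW similarity to a reference signal can be sketched as follows. This is a minimal illustration and not the analysis pipeline used in this study: the function names `dtw_distance` and `rank_by_similarity` are hypothetical, the signals are synthetic values rather than measured EMG data, and the absolute-difference cost is an assumption. The sketch ranks by DTW distance, so a smaller distance means a more similar tendency.

```python
def dtw_distance(a, b):
    """Dynamic time warping distance with an absolute-difference local cost."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = minimal accumulated cost of aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            cost[i][j] = local + min(
                cost[i - 1][j],      # a advances, b[j-1] is reused
                cost[i][j - 1],      # b advances, a[i-1] is reused
                cost[i - 1][j - 1],  # both advance
            )
    return cost[n][m]


def rank_by_similarity(reference, candidates):
    """Return candidate names ordered from most to least similar to reference."""
    distances = {name: dtw_distance(reference, sig) for name, sig in candidates.items()}
    return sorted(distances, key=distances.get)


# Synthetic signals (made up for illustration, not measured EMG data).
reference = [0.0, 1.0, 2.0, 1.0, 0.0]
candidates = {
    "time_shifted": [0.0, 0.0, 1.0, 2.0, 1.0, 0.0],   # same shape, delayed
    "larger_amplitude": [0.0, 3.0, 6.0, 3.0, 0.0],    # same timing, stronger
}

ranking = rank_by_similarity(reference, candidates)
print(ranking)
```

In this toy example, the delayed copy aligns with the reference at no cost, while the signal with the larger amplitude is ranked as less similar. With this cost function, an interaction that produces a much stronger EMG signal can therefore score low on similarity even when the timing of the motion matches.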

The interaction with the highest EMG signal was the Haptic TouchX, which showed an overwhelmingly larger EMG signal than the others. In this study, Haptic TouchX scored the lowest; however, this should not yet be interpreted as it being the least helpful for fine motor development. To clarify this, follow-up research is needed on whether a higher EMG signal leads to better fine motor development and more sophisticated use of fine motor skills.

In addition, there was no significant difference between the scores of the LMC and the Haptic Glove; however, since the LMC ranked first in both the grab-motion and pick-motion analyses, follow-up research is necessary to identify the reason for this result. For the follow-up research, our Haptic Glove needs to be more sophisticated, and more data will need to be analyzed through more user interviews.

Through this study, we consider that we have succeeded in creating a haptic glove that is inexpensive and can convey the elasticity of a deformable object. We also expect that this glove will allow more people to enjoy and experience the metaverse more immersively. It is expected to help address the absence of haptics, which is currently a disadvantage and a major obstacle for the metaverse. Lastly, because the price of our Haptic Glove is low, we hope it can be used in many countries and societies regardless of their economic means and can have a positive impact on the expansion of the metaverse.